Deep neural networks perform feature engineering automatically within the network itself. The Compressed Sparse Row (CSR) format is commonly used to represent sparse matrices in machine learning because it supports efficient row access and matrix multiplication.
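To make the CSR idea concrete, here is a minimal sketch in plain Python. The helper names `to_csr` and `csr_matvec` are hypothetical and chosen only for illustration; they are not part of any library. CSR stores the nonzero values (`data`), their column positions (`indices`), and row boundaries (`indptr`), so a matrix-vector product only touches stored entries.

```python
# Minimal CSR sketch in plain Python (illustrative only; `to_csr` and
# `csr_matvec` are hypothetical helper names, not a library API).

def to_csr(dense):
    """Convert a dense list-of-rows matrix into the three CSR arrays."""
    data, indices, indptr = [], [], [0]
    for row in dense:
        for col, value in enumerate(row):
            if value != 0:
                data.append(value)    # nonzero value
                indices.append(col)   # its column index
        indptr.append(len(data))      # where the next row starts in `data`
    return data, indices, indptr


def csr_matvec(data, indices, indptr, x):
    """Multiply a CSR matrix by a dense vector, visiting only stored entries."""
    result = []
    for i in range(len(indptr) - 1):
        start, end = indptr[i], indptr[i + 1]
        result.append(sum(data[k] * x[indices[k]] for k in range(start, end)))
    return result


dense = [
    [5, 0, 0, 0],
    [0, 0, 8, 0],
    [0, 0, 0, 0],
    [0, 6, 0, 3],
]
data, indices, indptr = to_csr(dense)
y = csr_matvec(data, indices, indptr, [1, 2, 3, 4])
print(data, indices, indptr, y)
```

Row `i` occupies the slice `indptr[i]:indptr[i + 1]` of `data` and `indices`, which is why row access is cheap in this layout.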

You can use it, for example, to calculate personalized recommendations.

There is an extensive body of work on optimizing sparse matrix multiplication (SpMM) for scientific workloads. Various sparse matrix storage formats have been proposed to reduce the memory and computation overhead of SpMM [14, 19]. Studies have also shown that choosing the right storage format can have a significant impact on SpMM performance. It turns out there are two significant kinds of matrices. (1) Sparse models contain fewer features and hence are easier to train on limited data.

Matrices are used in many different operations. The SciPy package offers several types of sparse matrices for efficient storage.

Our predictor predicts at runtime the sparse matrix storage format and the associated SpMM computation kernel for each GNN kernel. In a trained model, those patterns are encoded into a complex network of parameterized functions, which can guess the value of a missing piece of information.

scikit-learn and other machine learning packages such as imbalanced-learn (imblearn) accept sparse matrices as input. In light of this observation, we employ machine learning to automatically construct a predictive model based on XGBoost [7] for sparse matrix format selection.

Therefore, when working with large sparse data sets, it is highly recommended to convert a pandas DataFrame into a sparse matrix before passing it to scikit-learn. Sparse matrices are common in machine learning.

Model training is a process in which a large amount of data is analyzed to find patterns in the data. In scikit-learn, for example, linear methods can be used with both dense and sparse matrices, and they take advantage of sparse input: there are solvers written specifically for sparse matrices.
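As a rough illustration of a linear method accepting both dense and sparse input, the sketch below fits scikit-learn's `LogisticRegression` on the same data stored two ways. The synthetic data, the roughly 90% zero rate, and all variable names are assumptions made for this example only.

```python
import numpy as np
from scipy import sparse
from sklearn.linear_model import LogisticRegression

rng = np.random.default_rng(0)

# Synthetic data (an assumption for illustration): about 90% of entries are zero.
mask = rng.random((300, 20)) < 0.1
X_dense = rng.normal(size=(300, 20)) * mask
w_true = rng.normal(size=20)
y = (X_dense @ w_true > 0).astype(int)

# Same data in CSR form; only the nonzero entries are stored.
X_sparse = sparse.csr_matrix(X_dense)

# The estimator accepts either representation.
clf_dense = LogisticRegression(max_iter=1000).fit(X_dense, y)
clf_sparse = LogisticRegression(max_iter=1000).fit(X_sparse, y)

acc_dense = clf_dense.score(X_dense, y)
acc_sparse = clf_sparse.score(X_sparse, y)
print(acc_dense, acc_sparse)
```

The two fits should reach essentially the same accuracy; the benefit of the sparse input is in memory and compute, not in the model itself.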

SciPy provides tools for creating sparse matrices using multiple data structures, as well as tools for converting a dense matrix to a sparse matrix. Matrix factorization can be used with sparse matrices.
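A short sketch of both routes with `scipy.sparse`: converting an existing dense array, and building a matrix directly from (value, row, column) triplets. The small example matrix is made up for illustration.

```python
import numpy as np
from scipy import sparse

dense = np.array([
    [0, 0, 3, 0],
    [4, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 7, 0, 1],
])

# Route 1: convert an existing dense array to CSR.
csr = sparse.csr_matrix(dense)

# Route 2: build from triplets in COO format, which is convenient for construction.
rows = np.array([0, 1, 3, 3])
cols = np.array([2, 0, 1, 3])
vals = np.array([3, 4, 7, 1])
coo = sparse.coo_matrix((vals, (rows, cols)), shape=(4, 4))

# Convert between formats as needed, and back to dense for inspection.
csc = coo.tocsc()
back = csr.toarray()

print(csr.nnz, csc.format)
print(back)
```

COO is usually easier to build, while CSR and CSC are better suited to arithmetic and slicing, so constructing in one format and converting to another is a common pattern.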

Perhaps the most well-known example of sparse learning is the variant of least squares known as the LASSO [41], which takes the form

$$\min_{\beta} \; \|X^T \beta - y\|_2^2 + \lambda \|\beta\|_1 \qquad (1)$$

where $\lambda \ge 0$ controls the strength of the $\ell_1$ penalty. Sparse Learning Methods: in this section we review some of the main algorithms of sparse machine learning. For this reason, the solution of sparse triangular linear systems (sptrsv) is one of the most important building blocks in sparse numerical linear algebra.
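To show how an $\ell_1$ penalty produces sparse solutions, here is a minimal sketch that minimizes the LASSO objective $\|A\beta - y\|_2^2 + \lambda\|\beta\|_1$ with ISTA (iterative soft-thresholding). ISTA is one simple solver chosen for this illustration, not necessarily what any particular library uses. The matrix `A` plays the role of $X^T$ (one row per sample), and the data, `lam`, and function names are assumptions.

```python
import numpy as np


def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1: shrink toward zero, clipping at zero."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def lasso_objective(A, y, beta, lam):
    return np.sum((A @ beta - y) ** 2) + lam * np.sum(np.abs(beta))


def lasso_ista(A, y, lam, n_iter=500):
    """Minimize ||A @ beta - y||_2^2 + lam * ||beta||_1 by ISTA."""
    # Lipschitz constant of the gradient of the squared-error term.
    lipschitz = 2.0 * np.linalg.norm(A, 2) ** 2
    beta = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = 2.0 * A.T @ (A @ beta - y)
        beta = soft_threshold(beta - grad / lipschitz, lam / lipschitz)
    return beta


rng = np.random.default_rng(0)
A = rng.normal(size=(50, 10))            # synthetic design: 50 samples, 10 features
beta_true = np.zeros(10)
beta_true[:2] = [3.0, -2.0]              # only two features actually matter
y = A @ beta_true + 0.01 * rng.normal(size=50)

lam = 5.0
beta_hat = lasso_ista(A, y, lam)
print(np.round(beta_hat, 3))
```

The soft-thresholding step sets small coefficients to exactly zero, which is where the sparsity of the solution comes from.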

Minimize $\operatorname{rank}(M)$ subject to a constraint on the known entries of the matrix. The usefulness of sparsity is even reflected in implementations. For example, the entropy-weighted k-means algorithm is better suited to this problem than the regular k-means algorithm.

There are quite a few reasons. Since I cannot see the actual data behind the sparse matrix, I had to scale the values first. I used to do this with Python, but there seem to be packages for R as well.

Some machine learning models are robust to sparse data and may be used instead of changing the dimensionality of the data. P in the constraint is an operator that takes the known entries of your matrix and constrains the corresponding entries of the completed matrix to be the same. I had to one-hot encode 7 features, which produced a sparse matrix as a result.
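The reason one-hot encoding yields a sparse matrix is that each row gets exactly one nonzero entry per encoded feature. A minimal sketch with NumPy and SciPy, using a made-up `colors` column as the single categorical feature:

```python
import numpy as np
from scipy import sparse

# Hypothetical categorical feature, for illustration only.
colors = np.array(["red", "green", "blue", "green", "red", "red"])

# Map each value to an integer code; `categories` is sorted alphabetically.
categories, codes = np.unique(colors, return_inverse=True)
codes = codes.ravel()

n_rows, n_cols = len(colors), len(categories)

# One nonzero per row: a 1 in the column of that row's category.
one_hot = sparse.csr_matrix(
    (np.ones(n_rows, dtype=np.int8), (np.arange(n_rows), codes)),
    shape=(n_rows, n_cols),
)

print(categories)
print(one_hot.toarray())
```

With many categories per feature, almost every entry in a row is zero, so storing only the nonzero entries saves a large amount of memory.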

This contrasts with traditional statistical and machine learning models, where feature engineering is typically done before training the model. Generally, for sparse, high-dimensional data, XGBoost works well because it provides a separate path for sparse data; you can also try a LightGBM model.

The Support Vector Machine (SVM) is a classical machine learning model for classification and regression and remains a state-of-the-art model. Many of our models are trained on sparse data, meaning that the data has a lot of fields.

Unfortunately, in R few models support sparse matrices besides glmnet, as far as I know. As a result, when sparse matrices come up in conversations about modeling with R, the glmnet model usually follows. Fewer features also mean less chance of overfitting. (2) Fewer features also mean the model is easier to explain to users. Sparse classification and regression.

Recent work has adopted automated techniques that use supervised machine learning (ML) models to predict the best sparse format for a given matrix on a target architecture [3, 24, 28, 30, 38]. Sparse features are common in machine learning models.

Over the years, the sptrsv kernel has been implemented for almost all relevant parallel platforms [16]. This algorithm minimizes the rank of your matrix M. While they occur naturally in some data collection processes, more often they arise when applying certain data transformation techniques.

Of the two matrices in the constraint, one is the final result and the other is the incomplete matrix you currently have. Sparse matrices have lots of zero (0) values.

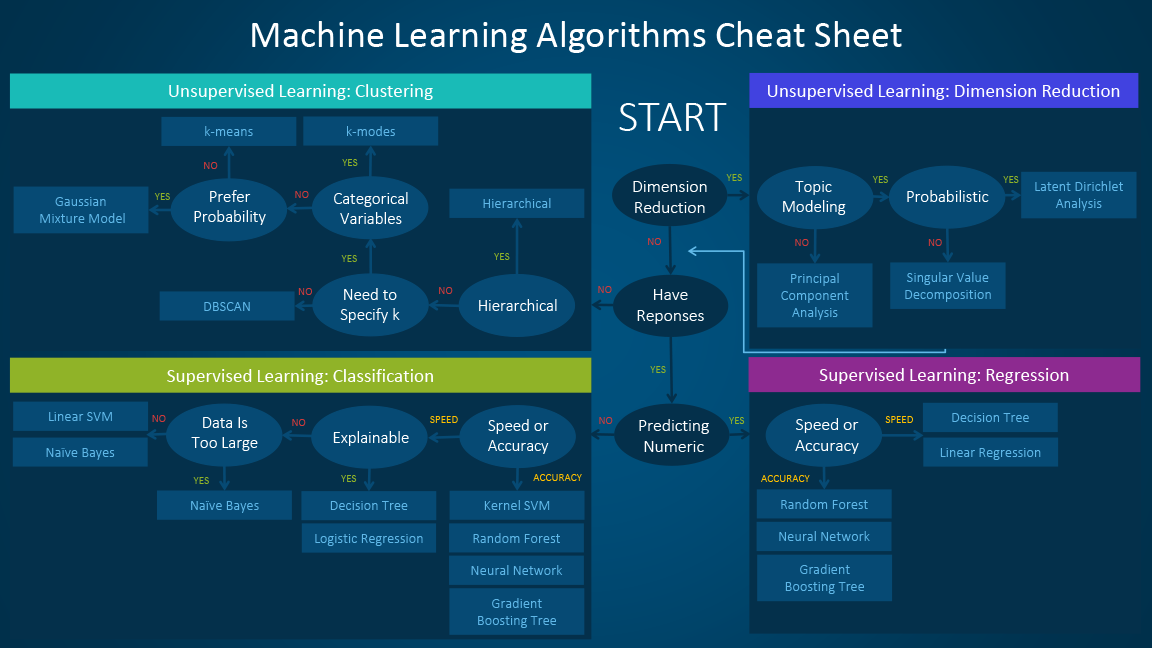

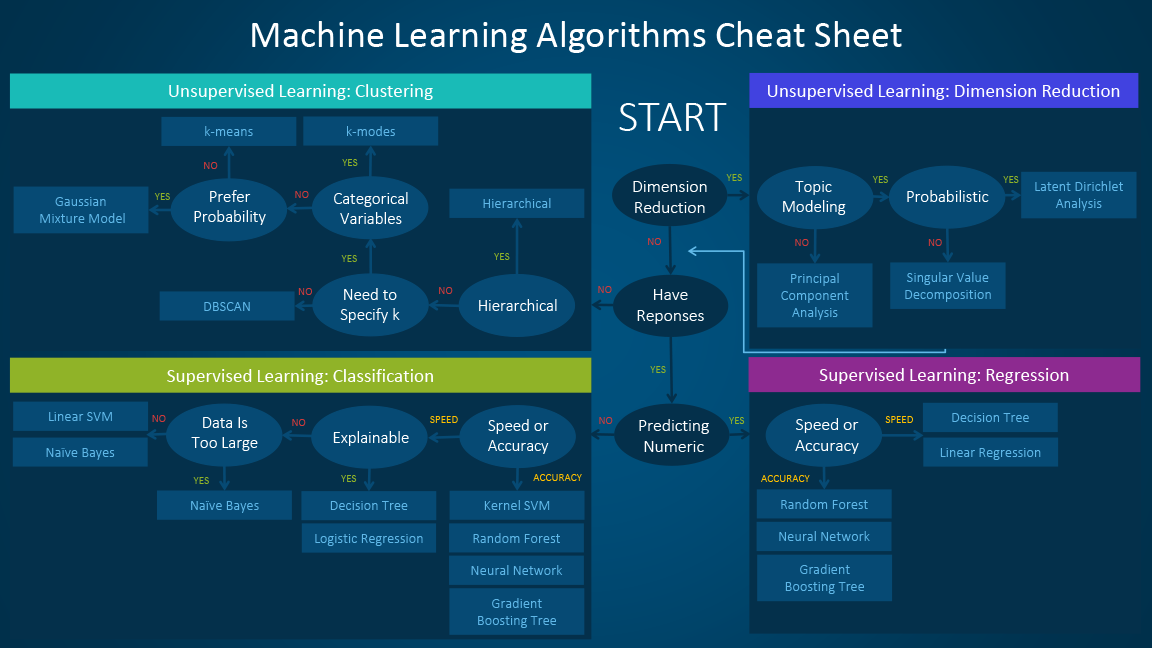
